Getting Started

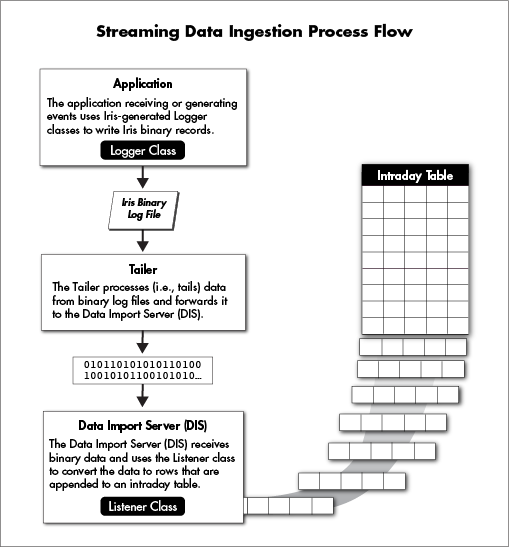

Deephaven has two main ways to ingest data: batch or streaming. Batch, or static, data is imported in relatively large chunks, usually on some schedule. Streaming, or "ticking", data arrives continuously, as events occur.

Importing batch data into Deephaven is fairly easy, as Deephaven provides built-in tools that simplify most of the required tasks. However, setting up a new streaming data source is more complicated. While there are tools in Deephaven to help with this, there are more tasks involved, as well as the need for data-source specific executables that feed new events to Deephaven processes. (See Streaming Data for details of setting up a new streaming source).

There are multiple options for configuring Deephaven to import batch data from a new source. All of the details of these options are explained in the following sections of this document, however, the simplest and quickest approach, which will work for most scenarios, is covered below.

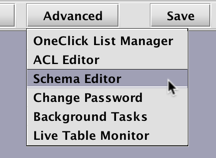

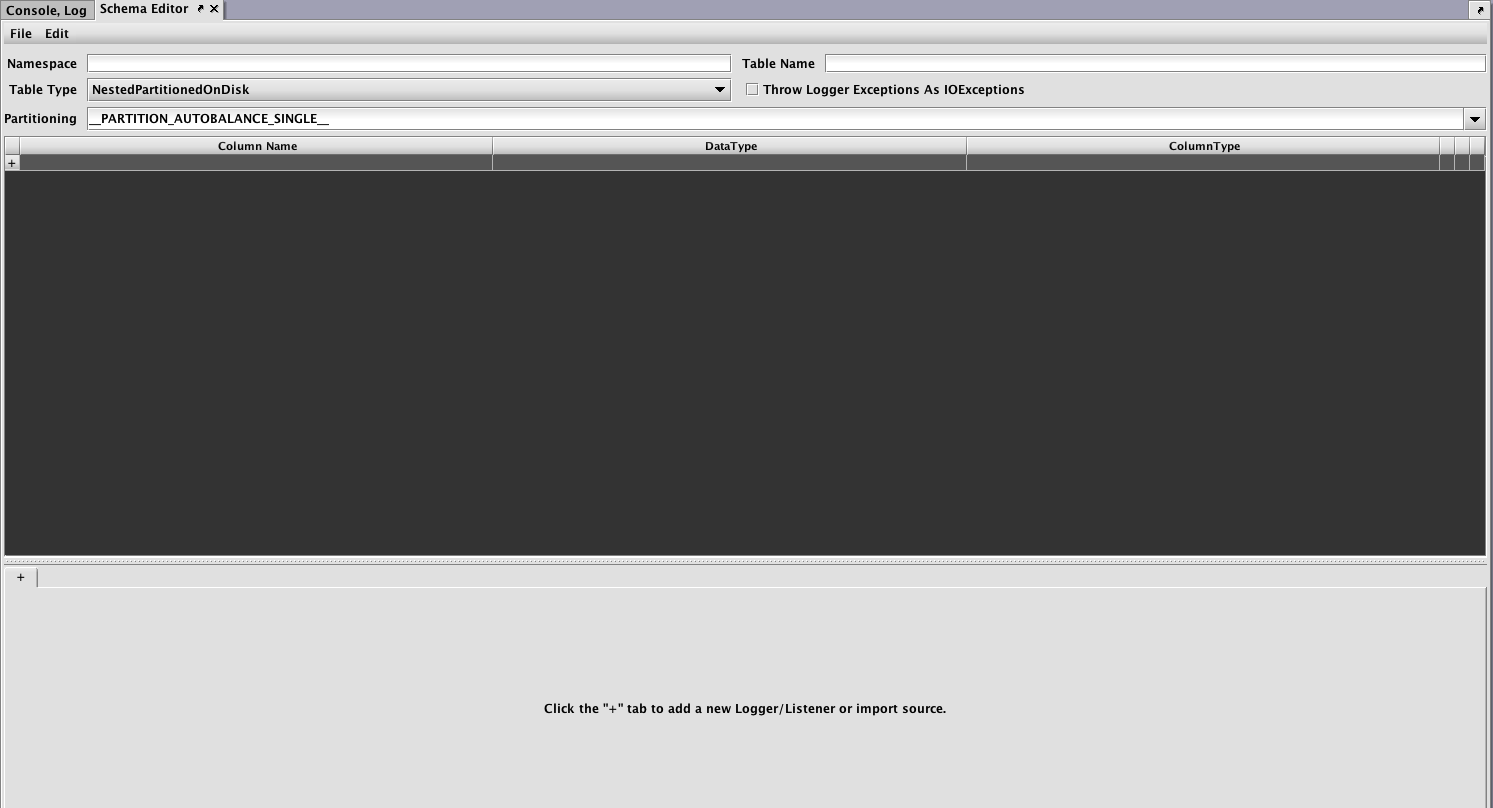

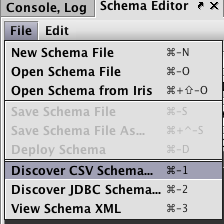

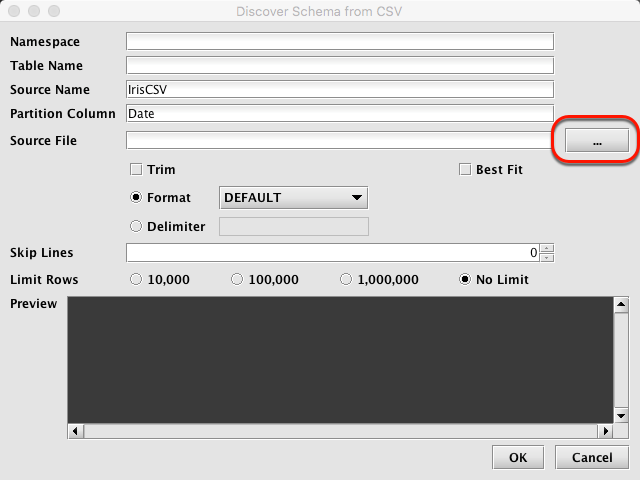

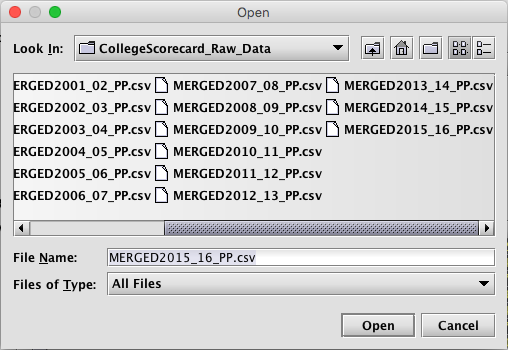

- Create a schema for the new table.

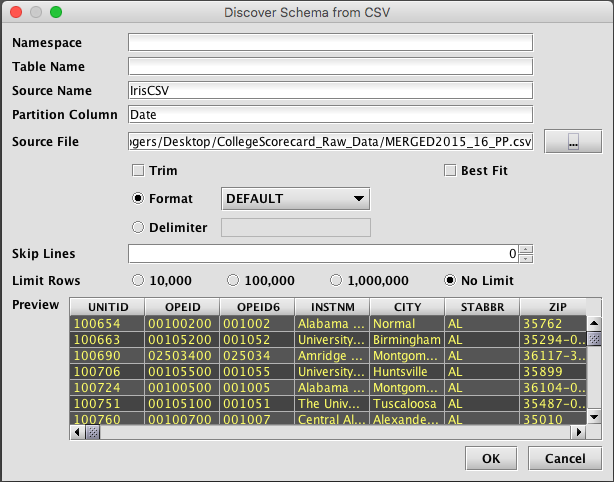

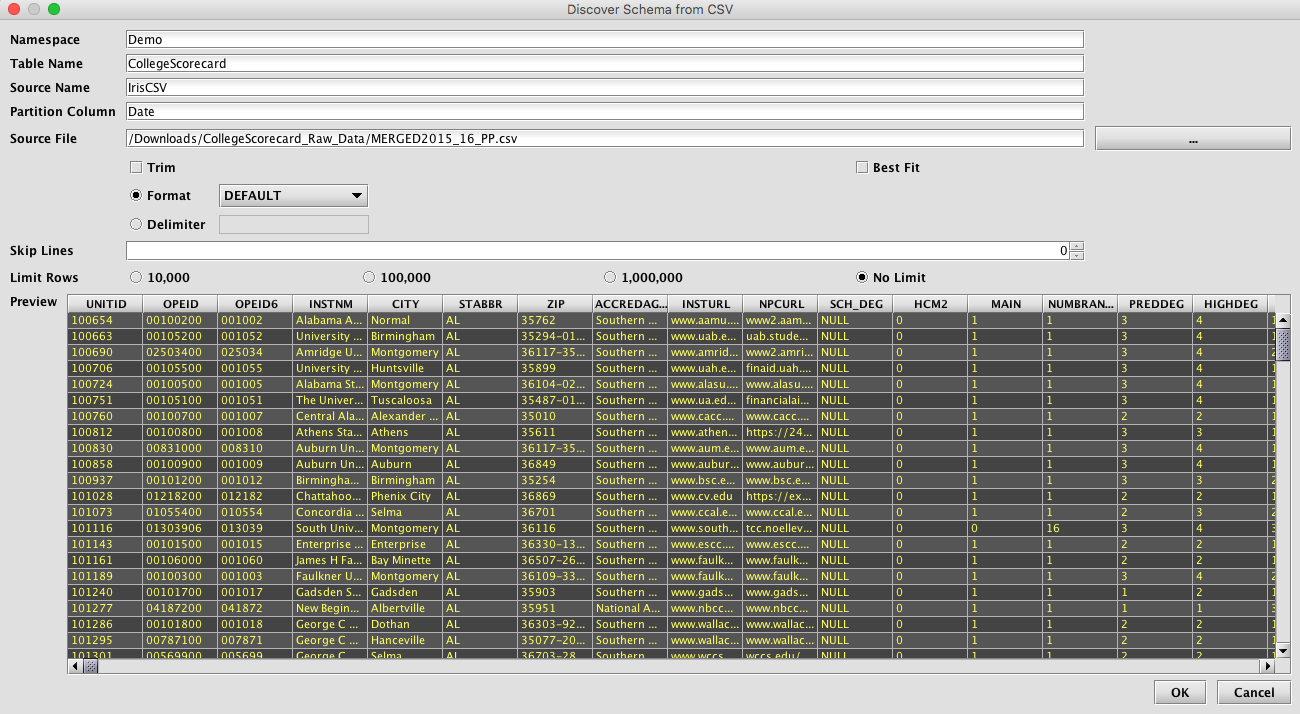

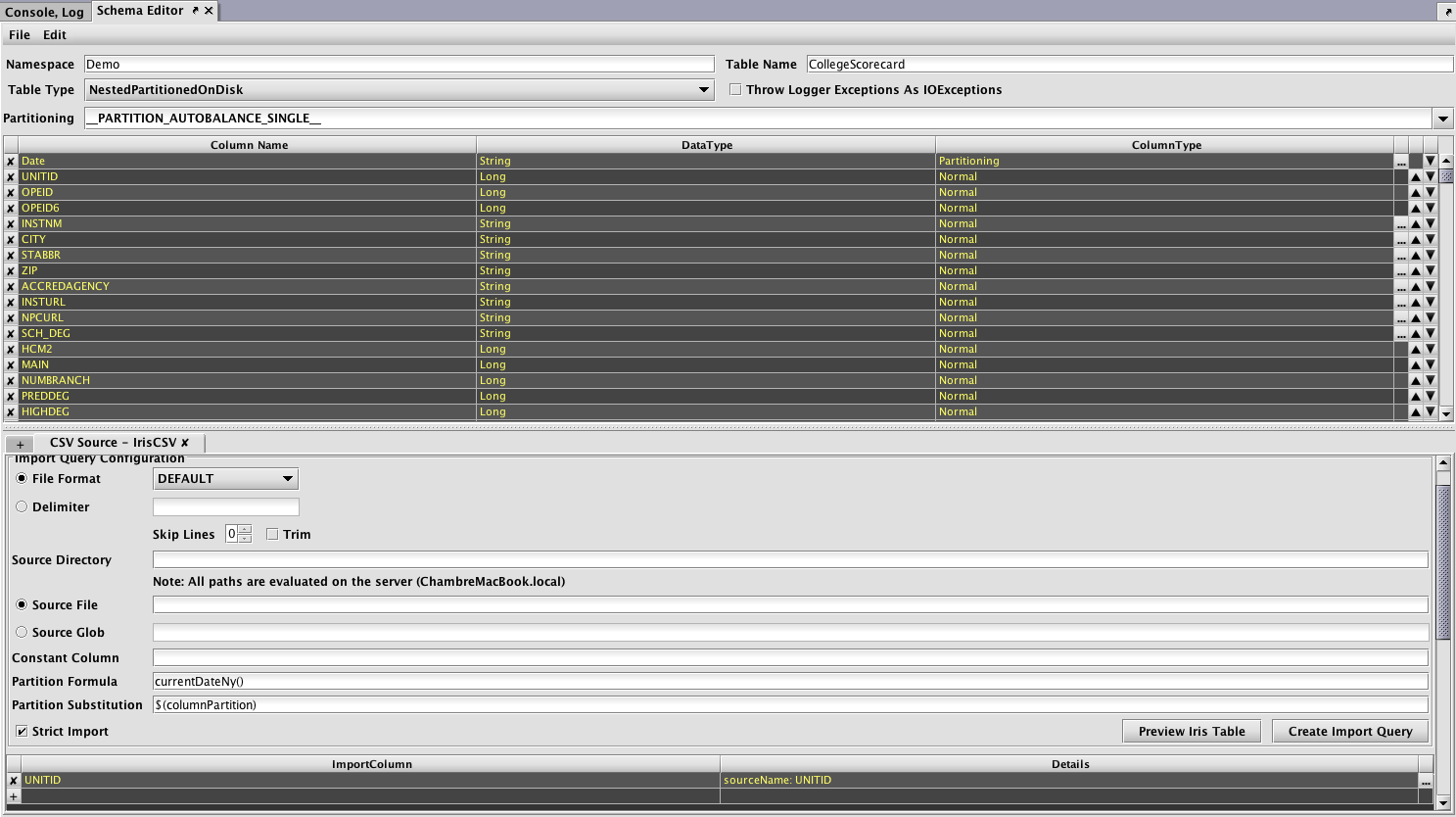

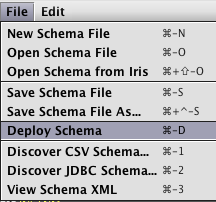

A schema describes the layout of the table - its columns and data types - and also includes instructions for importing data from different batch sources. The Schema Editor tool is the easiest way to create a new schema, especially if a sample of the data to be imported is available for the Schema Editor to inspect. - Create and schedule queries to load and process data into the new schema.

These import and merge queries can be created directly from the Schema Editor tool, or from the Persistent Query Configuration Editor.

An example of this process is presented in Example 3 - Batch Data Import Using the Schema Editor and Query-based Importing

NOTE: One consideration when importing batch data is that, by default, Deephaven will not display freshly imported batch data for today's date to users. The preferred method for making this data accessible is to merge it. Additional details about how this data is handled and other options for displaying it are covered in the next sections.

Overview

The data used with Deephaven is generally imported by a system administrator. Therefore, you should follow the instructions provided by your system administrator in regard to accessing data. However, the following is provided as an overview of how data is imported and then stored in the system.

There are two main categories of data in Deephaven: intraday and historical. Intraday data is stored in the order in which it was received - appended to a table. Historical data is partitioned, usually by date, into sets that will no longer have any new data appended, and can be arranged based on criteria other than arrival time. The merge process is used to read the intraday data and reorganize it as it writes it out as historical data. All new data coming into Deephaven is first written as intraday data. The typical flow is to import or stream new data into intraday, and then (usually nightly) merge it to historical. Once historical data has been validated, the intraday versions of the data can be removed.

Deephaven is designed so each enterprise can customize their own installation, including the data being imported and the authorization to access the data. The following diagram is a very generalized view of the data import process:

Although merging data is recommended, it is not required. Merged data can be more efficient to store, and is faster to retrieve for queries, but it is also possible to use old data that has been left unmerged in intraday.

Note: Imported data is immediately available through intraday queries (db.i) unless the data was imported with today's date as its partitioning value, and the TableDataServiceFactory.defaultMode has not been set to LOCAL.

The Merge and Data Validation Processes

All new data added to an Deephaven installation is first stored in the Intraday database. Typically this data is periodically merged into the Historical database where it stored for long-term use. The process of reorganizing time-series data from an intraday partition and writing it into a historical partition is accomplished by the com.illumon.iris.importers.MergeIntradayData class. Like the batch data import classes, this class can be used directly, or from import scripts or import queries. Again, import queries are the preferred method to use this class, as they provide an easier to use interface, scheduling, execution history, and dependency chaining.

The data merge step by itself only handles reorganizing the data and copying it from intraday to historical. It does not validate or remove the intraday version of the partition; at the current time these steps must be performed through the use of on-server scripts. Validation of merged data is done by running a validator class, to check that values are within expected ranges; e.g. that no more than a specified percentage of rows have null values in one of the fields. A typical data "lifecycle" consists of some form of ingestion to intraday, followed by merge, validation, and then, after successful validation, deletion of the intraday data, including the directory that contained the intraday partition.

See: Appendix C: Data Merging Classes for further information.

Importing Batch Data

Data Types and Sources

The following data types and sources are currently supported by Deephaven:

- Binary Import - Deephaven binary log files

- CSV - Comma-separated values

- JDBC - Java Database Connectivity

- XML - eXtensible Markup Language

- Deephaven Binary Log Files

- Other - Virtually any data type can be imported into Deephaven with the proper importer class.

Additionally, data can be imported from commercial sources such as the following:

- Quandl

- MayStreet

Please consult your system administrator for additional information about importing other data types or sources.

Import Metadata

In the simplest cases, there will be a one-to-one match of columns from a data import source to the Deephaven table into which data is being imported, and the data values will all "fit" directly into the table columns with no conversion or translation needed. In other cases, things may not be so simple.

When source and destination column names don't match, or data does need to be manipulated during import, the ImportSource section of the schema provides instructions for the importer to use in driving the import process.

Schema files created with the Schema Editor based on sample data will have an ImportSource block defined and may have needed transformation rules automatically created. Details of syntax and available mapping and transformation capabilities of the import process can be found in the ImportSource XML topic below.

Batch Import Processes

Deephaven provides batch data import classes that can read data from CSV, JDBC, XML and binary log file sources and write it to intraday tables. These classes can be used directly, or through provided scripts or import queries in the Deephaven UI. These methods are designed for any size of data and for persistent import of data that will probably be shared by multiple users. For temporary import of a single small dataset see Importing Small Datasets.

Using queries to import data through the Deephaven UI is the preferred method of using the import classes. In addition to providing a graphical user interface for creating and editing import tasks, the query-based import methods include task scheduling, dependency chaining, and a single location to view history and status for imports that have been run.

Importing Data with Queries

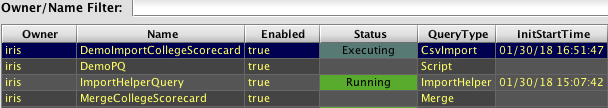

Deephaven allows you to run batch import and merge jobs via the familiar Deephaven console in the form of persistent queries.

Note: the appropriate schema files should already be deployed before this stage. Review Schemas for additional information on that process.

Data import queries enable authorized users to import and merge data through the Deephaven Console instead of using command line interfaces.

To start an import or merge process, click the Query Config button in the Deephaven Console.

When the window opens, click the New button shown on the right side of the interface. This will open the Persistent Query Configuration Editor.

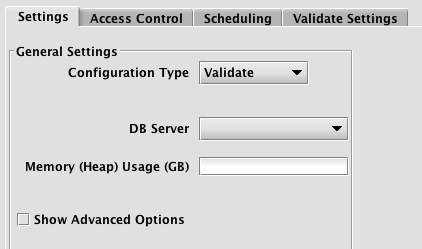

In the Settings tab, click the Configuration Type drop-down menu to reveal the options shown below.

- BinaryImport

- CsvImport

- JdbcImport

- Merge

- ReplayScript

- RunAndDone

- Script

- Validate

- XmlImport

BinaryImport, CsvImport, JdbcImport and XML are used for importing data from Deephaven binary log, CSV, JDBC, and XML data sources respectively. Merge is used for merging intraday data to historical data. Validate is used to validate data and to delete merged intraday data.These configurations are further described below.

Note: When configuring an import or merge query, it is essential that you select a DB_SERVER, which is running as the dbmerge user. The dbquery user, used for user queries, does not have permission to write to Deephaven system tables.

ReplayScript, RunAndDone and Script pertain to running scripts in the Deephaven Console. These options are further described in the section describing the Persistent Query Configuration Viewer/Editor.

BinaryImport

BinaryImport is used to import data from binary log files into an intraday partition. The binary log files must be available on the server on which the query will run; it is not for loading files on the user's system into Deephaven. The binary log files must be in the Deephaven binary log file format, usually generated by an application using a generated Deephaven logger.

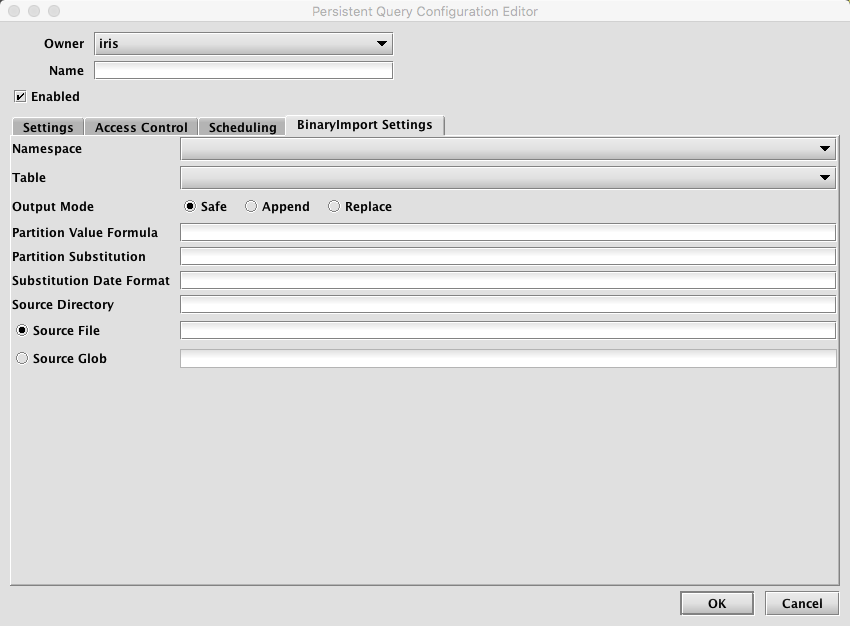

When BinaryImport is selected, the Persistent Query Configuration Editor window shows the following options:

To proceed with creating a query to import binary import files, you will need to select a DB Server and enter the desired value for Memory (Heap) Usage (GB).

Options available in the Show Advanced Options section of the panel are typically not used when importing or merging data. To learn more about this section, please refer to the Persistent Query Configuration Viewer/Editor.

The Access Control tab presents a panel with the same options as all other configuration types, and gives the query owner the ability to authorize Admin and Viewer Groups for this query. For more information, please refer to Access Control.

Clicking the Scheduling tab presents a panel with the same scheduling options as all other configuration types. For more information, please refer to Scheduling.

Clicking the BinaryImport Settings tab presents a panel with the options pertaining to importing binary log files:

BinaryImport Settings

- Namespace: This is the namespace into which you want to import the file.

- Table: This is the table into which you want to import the data.

- Output Mode: This determines what happens if data is found in the fully-specified partition for the data. The fully-specified partition includes both the internal partition (unique for the import job) and the column partition (usually the date).

- Safe - if existing data is found in the fully-specified partition, the import job will fail.

- Append - if existing data is found in the fully-specified partition, data will be appended to it.

- Replace - if existing data is found in the fully-specified partition, it will be replaced. This does not replace all data for a column partition value, just the data in the fully-specified partition.

- Partition Formula: This is the formula needed to partition the binary file being imported. Note that BinaryImport only supports single-partition imports. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()

"2017-01-01" - Partition Substitution: This is a token used to substitute the determined column partition value in the source directory, source file, or source glob, to allow the dynamic determination of these fields. For example, if the partition substitution is "

PARTITION_SUB", and the source directory includes "PARTITION_SUB" in its value, thatPARTITION_SUBwill be replaced with the partition value determined from the partition formula. - Substitution Date Format: This is the date format that will be used when a Partition Substitution is used. The standard Deephaven date partition format is

yyyy-MM-dd(e.g.,2018-05-30), but this allows substitution in another format. For example, if the filename includes the date inyyyyddMMformat instead (e.g.,20183005), that could be used in the Date Substitution Format field. All the patterns from the Java DateTimeFormatter class are allowed. - Source Directory: This is the path to where the binary log files are stored on the server on which the query will run.

- Source File: This the name of a single binary log file to import.

- Source Glob: This is an expression used to match multiple binary log file names.

CsvImport

CsvImport is used to import data from a CSV file into an intraday partition. The CSV file must be available on the server on which the query will run; it is not for loading a file on the user's system into Deephaven.

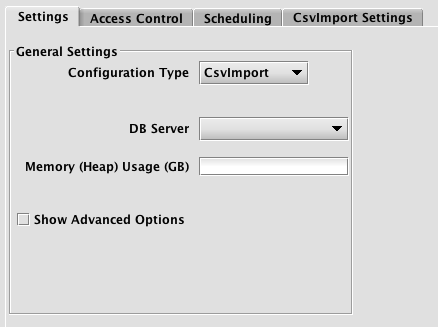

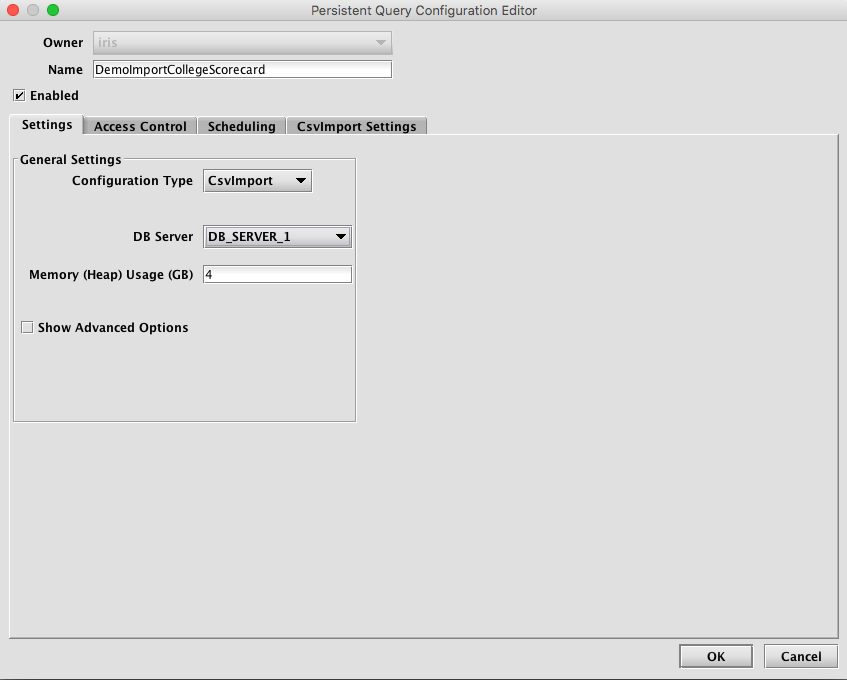

When CsvImport is selected, the Persistent Query Configuration Editor window shows the following options:

To proceed with creating a query to import a CSV file, you will need to select a DB Server and enter the desired value for Memory (Heap) Usage (GB).

Options available in the Show Advanced Options section of the panel are typically not used when importing or merging data. To learn more about this section, please refer to the Persistent Query Configuration Viewer/Editor.

The Access Control tab presents a panel with the same options as all other configuration types, and gives the query owner the ability to authorize Admin and Viewer Groups for this query. For more information, please refer to Access Control.

Clicking the Scheduling tab presents a panel with the same scheduling options as all other configuration types. For more information, please refer to Scheduling.

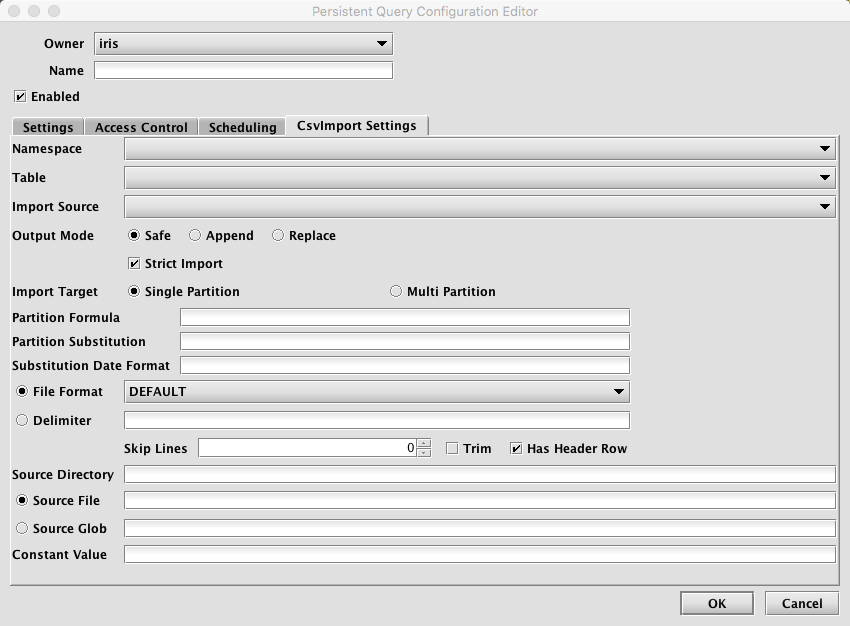

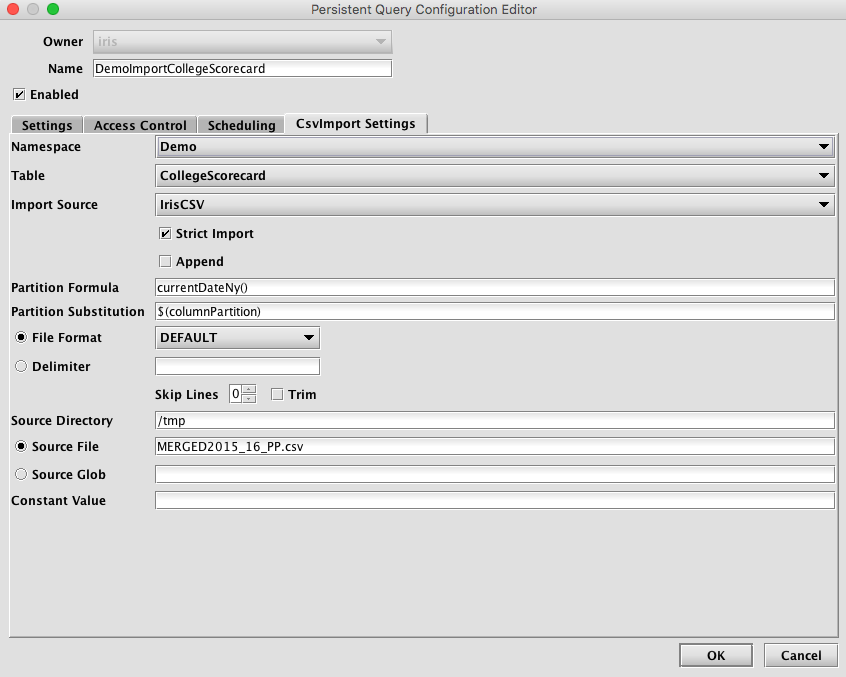

Clicking the CsvImport Settings tab presents a panel with the options pertaining to importing a CSV file:

CsvImport Settings

- Namespace: This is the namespace into which you want to import the file.

- Table: This is the table into which you want to import the data.

- Output Mode: This determines what happens if data is found in the fully-specified partition for the data. The fully-specified partition includes both the internal partition (unique for the import job) and the column partition (usually the date).

- Safe - if existing data is found in the fully-specified partition, the import job will fail.

- Append - if existing data is found in the fully-specified partition, data will be appended to it.

- Replace - if existing data is found in the fully-specified partition, it will be replaced. This does not replace all data for a column partition value, just the data in the fully-specified partition.

- Import Source: This is the import source section of the associated schema file that specifies how source data columns will be set as Deephaven columns.

- Strict Import does not allow tables with missing column values to adjust and become Deephaven tables.

-

Single/Multi Partition: This controls the import mode. In single-partition, all of the data is imported into a single Intraday partition. In multi-partition mode, you must specify a column in the source data that will control to which partition each row is imported.

- Single-partition configuration

Partition Formula: This is the formula needed to partition the CSV being imported. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()

"2017-01-01"Partition Substitution: This is a token used to substitute the determined column partition value in the source directory, source file, or source glob, to allow the dynamic determination of these fields. For example, if the partition substitution is "

PARTITION_SUB", and the source directory includes "PARTITION_SUB" in its value, thatPARTITION_SUBwill be replaced with the partition value determined from the partition formula.Substitution Date Format: This is the date format that will be used when a Partition Substitution is used. The standard Deephaven date partition format is

yyyy-MM-dd(e.g.,2018-05-30), but this allows substitution in another format. For example, if the filename includes the date inyyyyddMMformat instead (e.g.,20183005), that could be used in the Date Substitution Format field. All the patterns from the Java DateTimeFormatter class are allowed.- Multi-partition configuration

Import Partition Column: This is the name of the database column used to choose the target partition for each row (typically "

Date"). There must be an corresponding Import Column present in the schema, which will indicate how to get this value from the source data. - File Format: This is the format of the data in the CSV file being imported. Options include

DEFAULT,TRIM,EXCEL,TDF,MYSQL,RFC4180andBPIPE*. - Delimiter: This can be used to specify a custom delimiter character if something other than a comma is used in the file.

- Source Directory: This is the path to where the CSV file is stored on the server on which the query will run.

- Source File: This the name of the CSV file to import.

- Source Glob: This is an expression used to match multiple CSV file names.

- Constant Value: A String of data to make available as a pseudo-column to fields using the CONSTANT sourceType

* Note: BPIPE is the format used for Bloomberg's Data License product.

JdbcImport

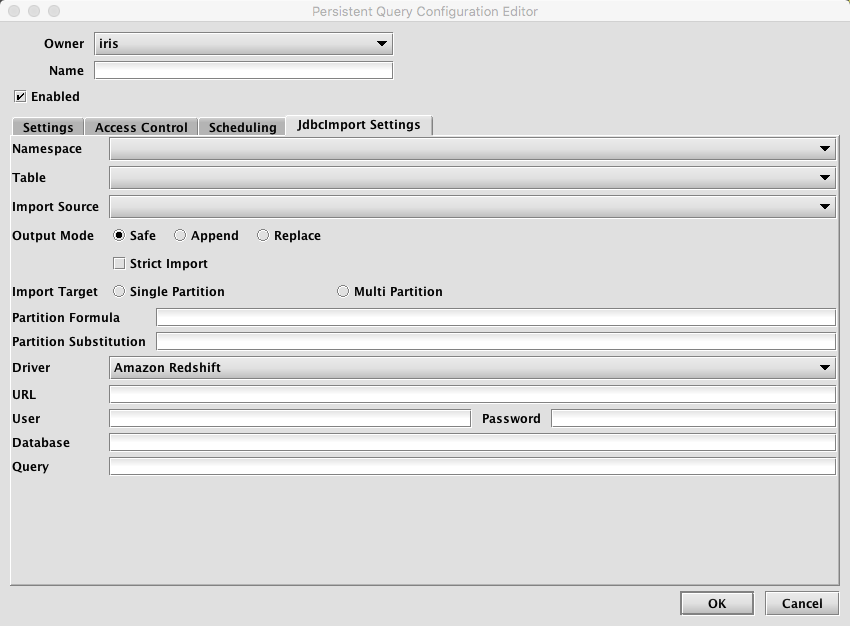

When JdbcImport is selected, the Persistent Query Configuration Editor window shows the following options:

This is similar to the options presented in the previous CsvImport window except for the JdbcImport Settings tab.

Clicking the JdbcImport Settings tab presents a panel with the options pertaining to importing from a JDBC database:

JdbcImport Settings

- Namespace: This is the namespace into which you want to import the file.

- Table: This is the table into which you want to import the data.

- Output Mode: This determines what happens if data is found in the fully-specified partition for the data. The fully-specified partition includes both the internal partition (unique for the import job) and the column partition (usually the date).

- Safe - if existing data is found in the fully-specified partition, the import job will fail.

- Append - if existing data is found in the fully specified partition, data will be appended to it.

- Replace - if existing data is found in the fully specified partition, it will be replaced. This does not replace all data for a column partition value, just the data in the fully-specified partition.

- Import Source: This is the import source section of the associated schema file that specifies how source data columns will be set as Deephaven columns.

- Strict Import does not allow tables with missing column values to adjust and become Deephaven tables.

- Single/Multi Partition: This controls the import mode. In single-partition, all of the data is imported into a single Intraday partition. In multi-partition mode, you must specify a column in the source data that will control to which partition each row is imported.

- Single-partition configuration

- Multi-partition configuration

- Driver: This is the name of the driver needed to access the database. It defaults to

Mysql. - URL: This the URL for the JDBC connection.

- Query: This is the query detailing what data you want to pull from the database.

Partition Formula: This is the formula needed to partition the CSV being imported. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()

"2017-01-01"Partition Substitution: This is a token used to substitute the determined column partition value in the query, to allow the dynamic determination of this field. For example, if the partition substitution is "

PARTITION_SUB", and the query includes "PARTITION_SUB" in its value, thatPARTITION_SUBwill be replaced with the partition value determined from the partition formula.

Import Partition Column: This is the name of the database column used to choose the target partition for each row (typically "Date"). There must be an corresponding Import Column present in the schema, which will indicate how to get this value from the source data.

In JDBC multi-partition mode, it is highly recommended that your SQL query order the data by the partition column (e.g. "SELECT * FROM table ORDER BY date"). It is much more efficient to import large tables in partition order because the importer need not close and reopen destination partitions multiple times.

XmlImport

XmlImport is used to import data from an XML file into an intraday partition. The XML file must be available on the server on which the query will run; it is not for loading a file on the user's system into Deephaven.

Deephaven's support for XML files allows loading files into a single table. See the Schema section for more details about the layout considerations of XML files when importing into Deephaven.

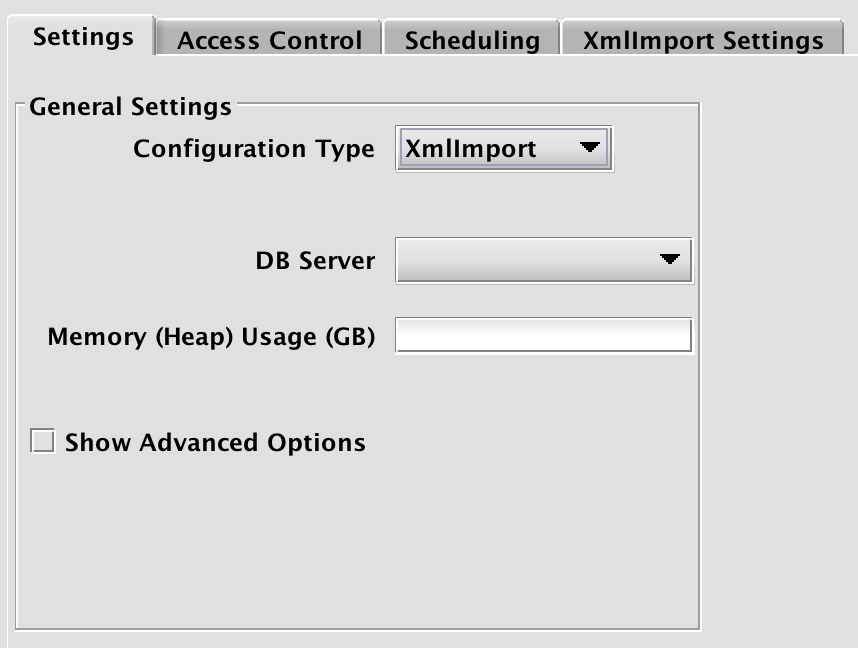

When XmlImport is selected, the Persistent Query Configuration Editor window shows the following options:

To proceed with creating a query to import a XML file, you will need to select a DB Server and enter the desired value for Memory (Heap) Usage (GB).

Options available in the Show Advanced Options section of the panel are typically not used when importing or merging data. To learn more about this section, please refer to the Persistent Query Configuration Viewer/Editor.

The Access Control tab presents a panel with the same options as all other configuration types, and gives the query owner the ability to authorize Admin and Viewer Groups for this query. For more information, please refer to Access Control.

Clicking the Scheduling tab presents a panel with the same scheduling options as all other configuration types. For more information, please refer to Scheduling.

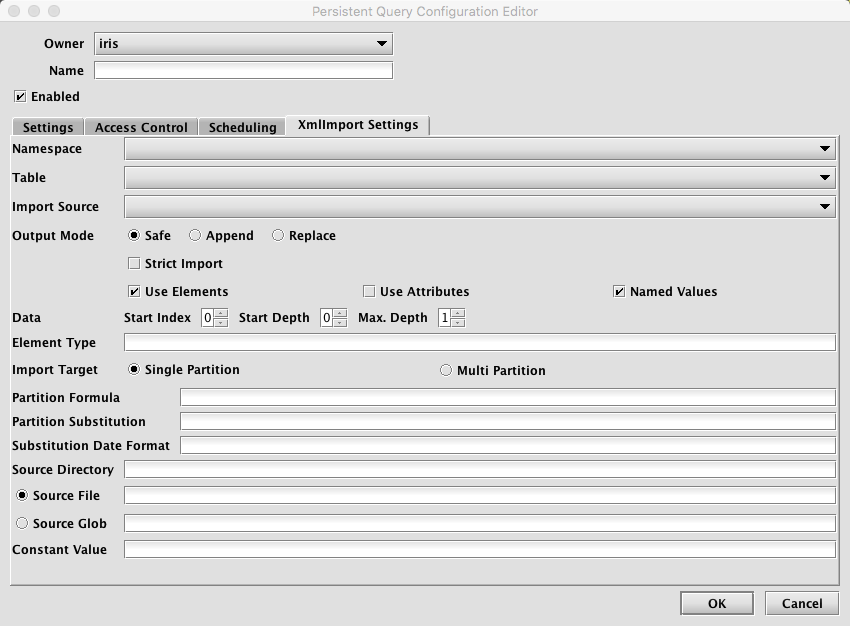

Clicking the XMLImport Settings tab presents a panel with the options pertaining to importing a XML file:

XmlImport Settings

- Namespace: This is the namespace into which you want to import the file.

- Table: This is the table into which you want to import the data.

- Output Mode: This determines what happens if data is found in the fully-specified partition for the data. The fully-specified partition includes both the internal partition (unique for the import job) and the column partition (usually the date).

- Safe - if existing data is found in the fully-specified partition, the import job will fail.

- Append - if existing data is found in the fully-specified partition, data will be appended to it.

- Replace - if existing data is found in the fully-specified partition, it will be replaced. This does not replace all data for a column partition value, just the data in the fully-specified partition.

- ImportSource: This is the import source section of the associated schema file that specifies how source data columns will be set as Deephaven columns.

- Strict Import does not allow tables with missing column values to adjust and become Deephaven tables.

- XML File Reading Settings:

Use Elements- Take import values from the contents of XML elements. At least one ofUse ElementsorUse Attributesmust be selected.Use Attributes- Take import values from the values of XML attributes. At least one ofUse ElementsorUse Attributesmust be selected.Named Values- When checked, element or attribute names are used for column names; when cleared, values will be assigned to columns positionally in the order they are found. Note that positional values will be parsed from the XML in the order of the columns in the table. As such, the table schema for documents that do not used Named Values, must closely match the layout of columns in the XML document.

- Data:

- Start Index - The number of the element, starting with the root itself (0), from the root of the XML document where the importer will expect to find data.

- Start Depth - How many level down, under the Start Index element, the importer should traverse to find data elements.

- Max. Depth - When data elements may contain data in child elements, this determines how many levels further down the importer should traverse while looking for import values.

- Element Type - The string name of the data element type. In some cases, this may be a / delimited path to the types of elements that should be imported.

- Single/Multi Partition: This controls the import mode. In single-partition, all of the data is imported into a single Intraday partition. In multi-partition mode, you must specify a column in the source data that will control to which partition each row is imported.

- Single-partition configuration

Partition Formula: This is the formula needed to partition the XML being imported. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()

"2017-01-01"Partition Substitution: This is a token used to substitute the determined column partition value in the source directory, source file, or source glob, to allow the dynamic determination of these fields. For example, if the partition substitution is "PARTITION_SUB", and the source directory includes "PARTITION_SUB" in its value, that PARTITION_SUB will be replaced with the partition value determined from the partition formula.

Substitution Date Format: This is the date format that will be used when a Partition Substitution is used. The standard Deephaven date partition format is

yyyy-MM-dd(e.g.,2018-05-30), but this allows substitution in another format. For example, if the filename includes the date inyyyyddMMformat instead (e.g.,20183005), that could be used in the Date Substitution Format field. All the patterns from the Java DateTimeFormatter class are allowed.

- Multi-partition configuration

Import Partition Column: This is the name of the database column used to choose the target partition for each row (typically "Date"). There must be a corresponding Import Column present in the schema, which will indicate how to get this value from the source data.

- Single-partition configuration

- Source Directory: This is the path to where the XML file is stored on the server on which the query will run.

- Source File: This the name of the XML file to import.

- Source Glob: This is an expression used to match multiple XML file names.

- Constant Value: A String of data to make available as a pseudo-column to fields using the CONSTANT sourceType

In most cases, it will be easier to test XML parsing settings using the Discover Schema from XML option of the Schema Editor utility. This allows a quick preview of the results of different settings, so the correct index, element type, etc, can be easily determined.

Merge

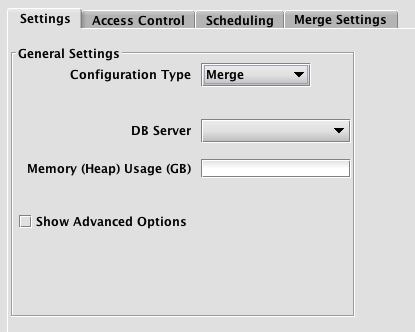

When Merge is selected, the Persistent Query Configuration Editor window shows the following options:

This is similar to the options presented in the previous CSV and JDBC configuration types window except for the new Merge Settings tab.

Note: Merges for multi-partition imports cannot be run from a persistent query at present. This must be done from the command line.

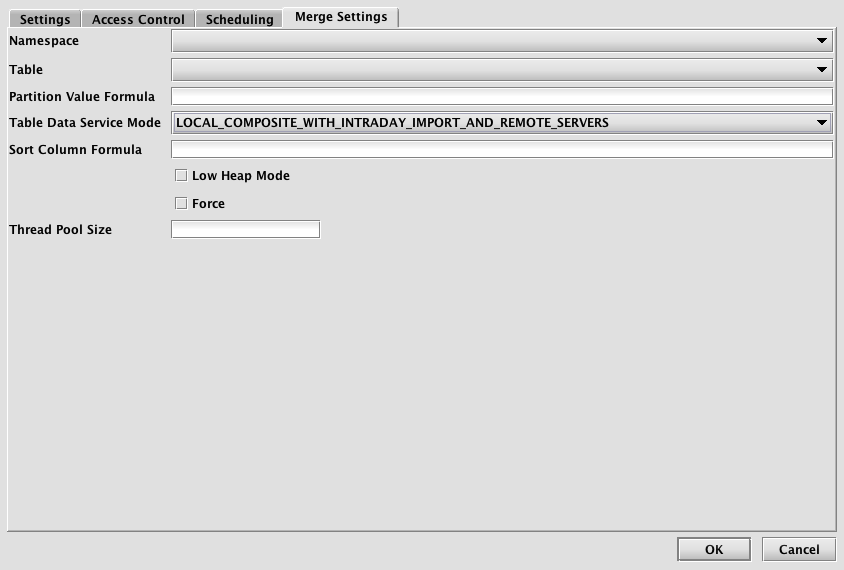

Clicking the Merge Settings tab presents a panel with the options pertaining to merging data to a file already in Deephaven:

Merge Settings

- Namespace: This is the namespace into which you want to import the file.

- Table: This is the table into which you want to import the data.

- Partition Value Formula: This is the formula needed to partition the data being merged. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()"2017-01-01"

- Table Data Service Mode: Specifies how input data to merge should be found and read. Options include:

LOCAL- Accesses all data from the file hierarchy under the Deephaven database root directory

LOCAL_COMPOSITE_WITH_INTRADAY_PROXY_SERVER- Accesses historical data in the same manner asLOCAL, but accesses intraday data via a proxy server, i.e.,db_tdcp.LOCAL_COMPOSITE_WITH_INTRADAY_REMOTE_SERVER- Accesses historical data in the same manner asLOCAL, but accesses intraday data via a remote service, i.e.,db_ltds.LOCAL_COMPOSITE_WITH_INTRADAY_IMPORT_AND REMOTE_SERVER- Accesses historical data in the same manner asLOCAL, but accesses intraday data via a composite remote service, i.e.,db_disfor same-day system intraday data,db_ltdsfor previous system intraday data, anddb_rutsfor user intraday data.LOCAL_WITH_ACTIVE_USER_LOGGER- Accesses historical data in the same manner asLOCAL, but accesses user intraday data via a remote service, i.e.,db_ruts.

- Sort Column Formula: An optional formula to sort on after applying column groupings. If no formula is supplied, data will be in source order except where reordered by grouping.

- Low Heap Mode: Whether to run in low heap usage mode, which makes trade-offs to minimize the RAM required for the merge JVM's heap, rather than maximizing throughput

- Force: Whether to allow overwrite of existing merged data

- Thread Pool Size: The number of concurrent threads to use when computing output order (i.e. grouping and sorting) or transferring data from input partitions to output partitions. More threads are not always better, especially if there is significant reordering of input data which may degrade read cache efficiency.

Once you have configured all the settings needed for your query, click the OK button in the lower right corner of the Persistent Query Configuration Editor window.

Typically, a merge query should have a dependency on an import query configured in the Scheduling tab.

Merge queries can also be created from an import query. After creating an import query with the Schema Editor, you will be prompted to create the corresponding merge. If you have an import query without a corresponding merge, an option to Create Merge Query will be available in the Query Config panel's context (right-click) menu.

Validate

Validate queries are used to validate data that has been loaded into Deephaven, and to delete intraday data (usually after it has been merged into the historical database). When Validate is selected, the Persistent Query Configuration Editor window shows the following options:

To proceed with creating a query to validate data, you will need to select a DB Server and enter the desired value for Memory (Heap) Usage (GB).

Options available in the Show Advanced Options section of the panel are typically not used when validating. To learn more about this section, please refer to the Persistent Query Configuration Viewer/Editor.

The Access Control tab presents a panel with the same options as all other configuration types, and gives the query owner the ability to authorize Admin and Viewer Groups for this query. For more information, please refer to Access Control.

Clicking the Scheduling tab presents a panel with the same scheduling options as all other configuration types. For more information, please refer to Scheduling.

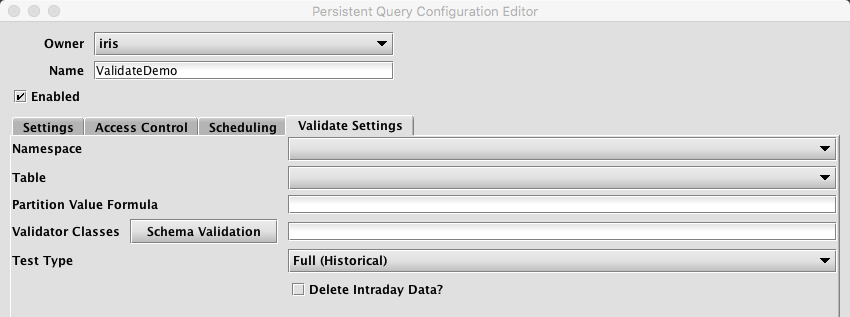

Selecting the Validate Settings tab presents a panel with the options pertaining to validating data, as shown below.

Validate Settings

- Namespace: This is the namespace for which data will be validated.

- Table: This is the table for which data will be validated.

- Partition Formula: This is the formula needed to determine the partition being validated. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()

"2017-01-01" - Validator Classes: This is the list of classes to be run to validate the data. If Schema Validation is selected, the default schema-based validation class will be selected, in which case the validation from the table's schema will be used. If no classes are chosen, then no validation will be performed (this may be useful if the query is only being used to delete intraday data).

- Test Type: this determines the type of test being run. Options include the following:

- Simple (Intraday) - runs a simple test against intraday data.

- Full (Intraday) - runs the full test suite against intraday data.

- Full (Historical) - runs the full test suite against historical data.

- Both (Intraday and Historical) - runs the full test suite against both intraday and historical data.

- Delete Intraday Data?: When selected, the corresponding intraday data will be deleted. If a validator is selected, the intraday data will be deleted only if all validation succeeds. If no validator is selected, the intraday data will be deleted when the query runs.

When a Validate query fails, the first validation failure exception is shown in the query panel's ExceptionDetails column, along with the total number of failures. Additional failures are not shown in the query panel, but must be retrieved from the text log, or from the Process Event Log for the worker that ran the query.

The following example query retrieves the failed test case details from the Process Event Log for a specific worker. The worker name should be visible in the query panel:

pelWorker=db.i("DbInternal", "ProcessEventLog").where("Date=currentDateNy()", "Process=`worker_63`", "LogEntry.contains(`FAIL`)")

Bulk Data Ingestion

Bulk data ingestion enables an administrator to easily import, merge, and validate data for multiple partition values. Instead of creating individual queries for each dataset, the bulk ingestion process enables users to add Bulk Copy options to an existing Import, Merge and/or Validation query configuration.

Users start with an existing persistent query - typically an Import query used for day-to-day data ingestion. Bulk Copy options/parameters can then be added to the query configuration to specify the range of partitions that need to be imported - typically a range of dates. Once these options are entered, Deephaven automatically creates individual, transient (short-lived) queries - one for each data partition - that will run when resources permit to import each individual dataset. Once each dataset has been imported, the transient query used to import that dataset will be erased.

Additional options are also available for simultaneously creating the respective Merge and Validation queries, which will also run in order (as resources permit) following the completion of the data import process. The Bulk Merge and Bulk Validate queries are dependent on the earlier queries in the chain. For example, a Bulk Merge query for a given partition value will only run if the Bulk Import query successfully completed, and a Bulk Validate query will run only if the Bulk Merge query successfully completed.

For example, to load 100 day's worth of CSV data in bulk (e.g.,100 files), the existing day-to-day CSV import query for that data could be chosen as the base query. The range of partitions, 100 different business dates in this case, is then added to the Bulk Copy options for the query. Once the new parameters are entered, Deephaven generates 100 individual transient queries to handle the import of those 100 datasets. These queries run whenever resources are available, thereby automating the entire process of importing a year's worth of data. Furthermore, merge and data validation queries for each dataset can also be generated in the same process.

Configuring the Bulk Copy Configuration

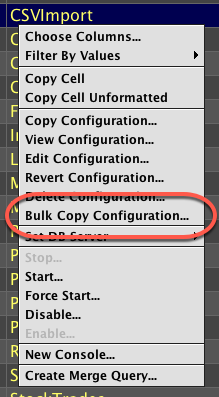

Open the Query Config panel in Deephaven. Right-clicking on any Import, Merge, or Validate query will generate the menu shown below. Select Bulk Copy Configuration.

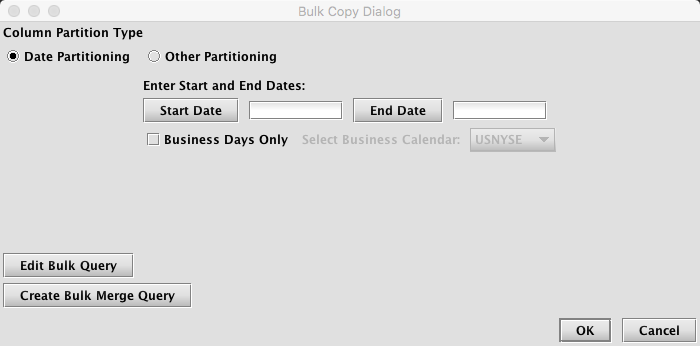

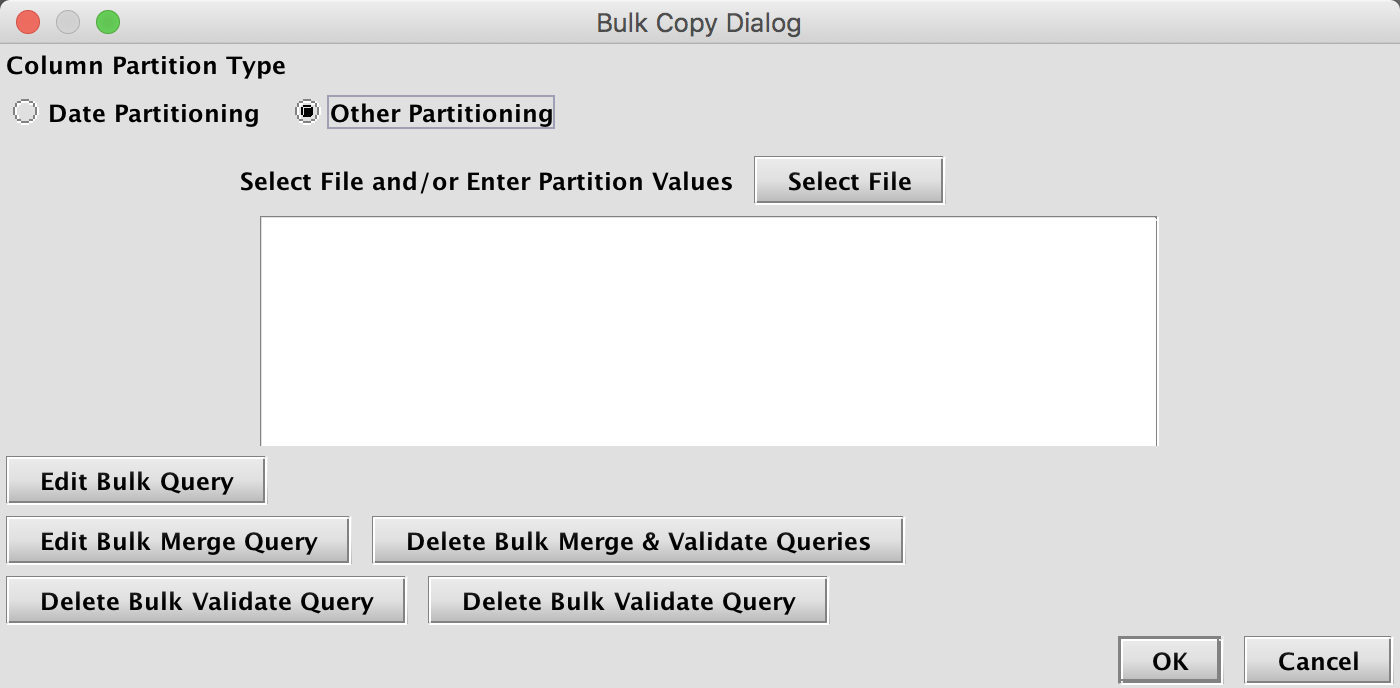

This opens the Bulk Copy Dialog window, which is shown below.

To begin, first choose either Date Partitioning or Other Partitioning.

If Date Partitioning is chosen, the following options are presented:

- Start Date: this is the starting date for the bulk operation, in

yyyy-MM-ddformat. When selected, Start Date will display a date chooser. - End Date: this is the ending date for the bulk operation, in

yyyy-MM-ddformat. When selected, End Date will display a date chooser. - Business Days Only: if selected, then only business days will be used for the bulk operation. Queries will not be created for weekends and holidays.

- Business Calendar: if the Business Days Only checkbox is selected, then a Business Calendar must also be chosen.

Depending on the type of query configuration originally chosen (Import, Merge, or Validate), the following additional buttons may appear in the Bulk Copy Dialog window:

- Edit Bulk Query: Selecting this option will allow you to edit the parameters for the bulk version of the underlying Import, Merge or Validate query. Edits are typically made to change the scheduling or other parameters. However, edits will only apply to the new bulk queries being processed The underlying query that was copied earlier will not be impacted.

- Create Bulk Merge Query: If the original configuration is an Import query, selecting Create Bulk Merge Query allows the user to create a set of Merge queries that will run for the same partition values used for the bulk import process. This set of Merge queries will run only when its respective set of Import queries successfully completes.

When selected, the Persistent Query Configuration Editor will open and present options to configure the Bulk Merge query. If a Merge query already exists that uses this namespace/table, the settings for that Merge query will be copied into the base settings for the new Bulk Merge query. Otherwise, the setting used for the new Bulk Merge query will be based on the original Import query. In either case, the settings can be edited by selecting Edit Configuration in the Bulk Copy Dialog window. - Delete Bulk Merge Query: This option appears only if a Bulk Merge Query has been created. Selecting Delete Bulk Merge Query will delete the Bulk Merge query associated with this Bulk Import process. Note: If there is a corresponding Bulk Validate query for the Bulk Merge query, both will be deleted when Delete Bulk Merge Query is selected.

- Create Bulk Validate Query: If the original configuration is a Merge query, selecting Create Bulk Validate Query allows the user to create a set of Validate queries that will run for the same partition values used for the Bulk Merge process. This set of Validate queries will run only when its respective set of Merge queries successfully completes.

When selected, the Persistent Query Configuration Editor will open and present options to configure the Bulk Validate query. If a Validate query already exists that uses this namespace/table, the settings for that Validate query will be copied into the base settings for the new Bulk Validate query. Otherwise, the setting used for the new Bulk Validate query will be based on the original Merge query. In either case, the settings can be edited by selecting Edit Configuration in the Bulk Copy Dialog window. - Delete Bulk Validate Query: This option appears only if a Bulk Validate Query has been created. Selecting Delete Bulk Validate Query will delete the Bulk Validate query associated with this Bulk Merge process.

- Delete Merge & Validate Queries: This button is only shown when Bulk Merge and Bulk Validate queries have been created. Selecting it removes both. A Bulk Validation query cannot run without a corresponding Bulk Merge query.

If Other Partitioning is chosen, the following options are presented:

When Other Partitioning is selected, the user must input a list of chosen partition values in the empty text field shown. These can be added manually (typed directly into the window) with each partition value listed on a new line. Or, Select File can be used to choose and then open a file. The file's contents will be read into the window and used to determine the partitioning values. Each line in the file will be a separate column partition value.

Depending on the type of query configuration originally chosen (Import, Merge, or Validate), the following additional buttons may appear in the Bulk Copy Dialog window:

- Edit Bulk Query: Selecting this option will allow you to edit the parameters for the bulk version of the underlying Import, Merge or Validate query. Edits are typically made to change the scheduling or other parameters. However, edits will only apply to the new bulk queries being processed The underlying query that was copied earlier will not be impacted.

- Create Bulk Merge Query: If the original configuration is an Import query, selecting Create Bulk Merge Query allows the user to create a set of Merge queries that will run for the same partition values used for the bulk import process. This set of Merge queries will run only when its respective set of Import queries successfully completes.

When selected, the Persistent Query Configuration Editor will open and present options to configure the Bulk Merge query. If a Merge query already exists that uses this namespace/table, the settings for that Merge query will be copied into the base settings for the new Bulk Merge query. Otherwise, the setting used for the new Bulk Merge query will be based on the original Import query. In either case, the settings can be edited by selecting Edit Configuration in the Bulk Copy Dialog window. - Delete Bulk Merge Query: This option appears only if a Bulk Merge Query has been created. Selecting Delete Bulk Merge Query will delete the Bulk Merge query associated with this Bulk Import process. Note: If there is a corresponding Bulk Validate query for the Bulk Merge query, both will be deleted when Delete Bulk Merge Query is selected.

- Create Bulk Validate Query: If the original configuration is a Merge query, selecting Create Bulk Validate Query allows the user to create a set of Validate queries that will run for the same partition values used for the Bulk Merge process. This set of Validate queries will run only when its respective set of Merge queries successfully completes.

When selected, the Persistent Query Configuration Editor will open and present options to configure the Bulk Validate query. If a Validate query already exists that uses this namespace/table, the settings for that Validate query will be copied into the base settings for the new Bulk Validate query. Otherwise, the setting used for the new Bulk Validate query will be based on the original Merge query. In either case, the settings can be edited by selecting Edit Configuration in the Bulk Copy Dialog window. - Delete Bulk Validate Query: This option appears only if a Bulk Validate Query has been created. Selecting Delete Bulk Validate Query will delete the Bulk Validate query associated with this Bulk Merge process.

- Delete Merge & Validate Queries: This button is only shown when Bulk Merge and Bulk Validate queries have been created. Selecting it removes both. A Bulk Validation query cannot run without a corresponding Bulk Merge query.

When a Bulk Import, Merge or Validate query is saved, each query is given the name of its base query, with the partitioning value appended with a "-". For example, a Bulk Import query might be named MyImport-2017-01-01.

ImportSource XML

Additional metadata to control and modify the import process is added in an ImportSource XML block of the schema. This is demonstrated below, with an expanded version of the previous schema:

<Table name="CsvNames5" namespace="Import" storageType="NestedPartitionedOnDisk">

<ImportSource name="JDBC1" type="JDBC">

<ImportColumn name="FirstName" sourceName="first_name" />

<ImportColumn name="LastName" sourceName="last_name" />

<ImportColumn name="Gender" sourceName="gender" />

<ImportColumn name="Race" sourceName="race" />

<ImportColumn name="FirstNameLength" sourceName="first_name" formula="FirstNameLength.length()" sourceType="String" />

</ImportSource>

<Partitions keyFormula="__PARTITION_AUTOBALANCE_SINGLE__"/>

<Column name="Date" intradayType="none" dataType="String" columnType="Partitioning" />

<Column name="FirstName" dataType="String" columnType="Normal" /><Column name="LastName" dataType="String"columnType="Grouping" />

<Column name="Gender" dataType="String" columnType="Normal" />

<Column name="Race" dataType="String" columnType="Normal" />

<Column name="FirstNameLength" dataType="int" columnType="Normal" />

<Column name="FLNameRatio" dataType="double" columnType="Normal" />

</Table>

Multiple ImportSource blocks can be added to allow various import instructions for different sources or types of import. The type attribute is required; currently supported values are "CSV","JDBC" and "XML". The name attribute is optional. When the import runs, if no ImportSource name is specified, the importer will use the first ImportSource block that matches its type. If no matching block is found (for type, and, if specified, for name), then the import will attempt to auto-match columns as it would if no ImportSource blocks existed.

To support multi-partition imports, you must have an ImportColumn for the partition column indicating how to partition each row (e.g., <ImportColumn name="Date" sourceName="trade_date"/> where trade_date is the CSV/JDBC column name).

An ImportSource block can contain multiple types of entries, including ImportColumn, Imports, maxError, arrayDelimiter and ImportTransform. These options are described below:

|

Entry Type/ Attribute |

Description |

|---|---|

|

|

Each entry describes how to map values for one column from the source data to the target table's schema |

|

The name of the target column in the Deephaven table, which must exactly match the name attribute of one of this schema's Column elements. This attribute is required; others are optional. Note: An |

|

The name of the column in the source data whose values should be mapped into the target column specified by name. This is used for mapping from a different column name in the source table, such as when a source name is not a valid Deephaven column name. Also use this attribute on the partition column when configuring multi-partition imports. |

|

The data type of the source data. This is used when data types must be translated during the import process. If As an example, |

|

Indicates whether null values will be accepted for this column. If the nullable attribute is present, it must be " Note: for primitive types, which do not natively support null values, the database uses reserved values to represent null. Null default values from |

|

This allows specification of Java code to do simple manipulations of data based on the source value itself. In the example noted earlier, the |

|

Similar to formula, transform creates a new function during the import using the details of the |

|

The value to use when import or parsing of the source value fails or the source is null. The default value must be type compatible with the target column type; for boolean columns, this would be lowercase " |

|

The full name of a custom class to use as the field writer for the column. Custom field writer classes must derive from the |

|

(CSV only) If this attribute is set to " |

|

(CSV only) Allows overriding the cache size for columns that will be cached. The default size is 1024 entries. |

|

Other attributes as needed to control transform functions. (e.g., |

|

The packages to import. A default set of packages is imported to support built-in functions and import tasks, but additional packages can be specified to provide other functions or support custom transforms. Imports can be just the qualified package/class name, or the full import Beside the internal imports used by the field writers, these imports are automatically added in the field writer context:

|

|

|

|

The maximum number of allowed parsing errors before failing the import. The default is 0. Allowed errors that will increment |

|

|

A string to be used as a delimiter when parsing array data. The default delimiter is a comma. |

|

|

See Custom Transforms below |

|

The name of a custom transform function, and will be the name sought when an |

|

The Java code that will be compiled as part of the setter for an |

Custom Transforms

Custom transforms can be added to the ImportSource block as ImportTransform elements. These elements must:

- Have a

Bodyelement that contains the text of the transform function - Have a

nameattribute that matches the name used in the transform attribute ofImportColumnsthat use the transform - Implement a

getFunctionfunction that returns a function matching the specification of the interface

At runtime, getFunction is passed to the ImportColumnDefinition of the column that is being set, and a boolean value indicating whether strict (true) or permissive (false) data checking has been selected. The ImportColumnDefinition allows getFunction to access details like the target column name, the target column type, and any ImportColumn details by using getImportColumn(), which provides access to functions such as getAttributeValue().

The following shows an example of a custom transform element that could be specified in the schema (Note: this is actually the built-in dbDateTimeFromLong transform):

<ImportTransform name="Sample">

<Body>

getFunction(ImporterColumnDefinition col, boolean strict)

{

String precision = col.getImportColumn().getAttributeValue("transformPrecision");

switch (precision) {

case "millis":

return (long value) -> new DBDateTime(DBTimeUtils.millisToNanos(value));

case "micros":

return (long value) -> new DBDateTime(DBTimeUtils.microsToNanos(value));

case "nanos": return DBDateTime::new;

default: return null;

}

</Body>

</ImportTransform>

When compiling the transform function, the target, and, if defined, source, data types are used to find a suitable Java functional interface with which to declare the getFunction. In the case of the example above, the target column type is DBDateTime, and the sourceType="long" attribute is added to the ImportColumn. This combination of source and target types is then used to create and execute the resultant function as a LongFunction<DBDateTime>.

A much simpler example follows:

<ImportTransform name="Sample">

<Body>

getFunction(ImporterColumnDefinition column, Boolean strict) {

return (long value) -> (2 * value);

}

</Body>

</ImportTransform>

If this is used for a column whose type is long, this will be mapped to a LongUnaryOperator, which takes a long and returns a Long. If the sourceType is not specified, the importer will attempt to use the target column type for matching a function interface.

If the function being returned from getFunction has a declared return type, this return type must exactly match the target column type for which the transform will be used. For example, a transform that will provide values for a Long column and that has a declared return type, must be declared Long, not long. However, the simpler, and recommended, implementation is to include only the transform body in the return from getFunction, so the system can perform implicit type conversion when compiling the transform.

Primitive Types and Boxed Types in Transforms

Not every combination of primitive types is supported by the Lambda interfaces used to pass transform functions into field writers. The supported combinations for unboxed primitive types are any combination of source and target of int, double, and long.

Therefore, a transform that reads a long value from the source and transforms it to a double value in the target table would use the primitive types of long and double.

However, a transform that reads a byte value and writes a short value would not be able to use primitive types. The importer will map this transform as a Function<Byte, Short> Note the capitalization. Byte and Short are the boxed (class-based) versions of the primitive types byte and short. This means there is extra overhead in the transform process to use the larger class-based versions of the data types.

Note: This concern with boxing of primitive types is only applicable to transforms. If the data can be implicitly converted (e.g., map a short to an int), or converted with a formula, then no boxing occurs. If the translation is too complex for a formula, another option for such primitive types is to write a custom FieldWriter. Since the FieldWriter classes are not interfaced through Lamdba functions, they have no limitations on which primitive data types they can use for source or target.

Another thing to be aware of is that primitive types that have a "null" default will be set to values from com.illumon.iris.db.tables.utils.QueryConstants when their sources are null. While these may be boxed when they are passed into a transform, they will not be automatically converted to true null values. If special handling is needed for null values in the transform, either check for equality to the QueryConstants values, or use a boxed type for the sourceType property of the column so these values will be preserved as null.

Array Types

The importers support long[] and double[] array types. From the source, these are expected to be strings (either a string field from JDBC or a column value in CSV). Formatting of the string is as a bracketed list of values. Brackets can be square brackets [ ], parentheses ( ), or curly braces { }. The delimiter can be specified with arrayDelimiter, but the default delimiter is a comma. Empty arrays [] are also supported.

An example array of double strings follows: [1.5, 6.4, 8.0, 2.2322, 88, 16.02]

Custom FieldWriter Classes

Custom FieldWriter classes can be used where a field writer needs to use values from multiple source columns, or where it is more desirable to package transform logic into a FieldWriter class rather than in an in-line transform or imported code. Custom field writers must extend the source-specific FieldWriter class (i.e., CsvFieldWriter for CSV imports or JdbcFieldWriter for JDBC imports.) They must implement a specific constructor, and must also implement the processField method.

An example of a custom field writer for JDBC follows:

public class JdbcMultiColumnExample extends JdbcFieldWriter{

private final RowSetter setter;

private final ResultSet rs;

private final ImporterColumnDefinition column;

private int rowCount;

//This is the constructor format that is required for custom JdbcFieldWriters

public JdbcMultiColumnTest(Logger log, boolean strict, ImporterColumnDefinition column, RowSetter setter, ResultSet rs, String delimiter) {

super(log, column.getName());

this.setter = setter;

this.column = column;

this.rs = rs;

rowCount = 0;

}

@Override

public void processField() throws IOException {

try {

setter.set(rs.getString("FirstName") + " " + rs.getString("LastName"));

rowCount++;

} catch (SQLException e) {

throw new RuntimeException("Failed to concatenate FirstName and LastName for target field: " + column.getName());

}

}

}

A custom field writer for CSV would be similar, but would not include RecordSet in its constructor arguments, and would require CsvRecord as an argument for processField.

JdbcFieldWriters must extend JdbcFieldWriter and implement a constructor that accepts:

Logger.class= logger instance passed down from mainImporterColumnDefinition.class= column definition of column to which this field writer will be attached (includingImportColumn, and target information)RowSetter.class=rowsetterfor the target columnResultSet.class= JDBC result set with the current row. The field writer should only read values from result set metadata and current row data, not change the position of the result set or close it.String.class= delimiter value used for array parsing functionsBoolean.class= strict value to indicate whether data checking is strict (true) or relaxed (false)

CsvFieldWriters must extend CsvFieldWriter and implement a constructor that accepts:

Logger.class= logger instance passed down from mainImporterColumnDefinition.class= column definition of column to which this field writer will be attached (includingImportColumn, and target information)RowSetter.class= row setter for the target columnString.class= delimiter value used for array parsing functionsBoolean.class= strict value to indicate whether data checking is strict (true) or relaxed (false)

Built-in Functions and Transforms

Some functions and transforms are built into the importer framework and can be used when designing ImportSource entries. Transforms often require other attributes to control their behavior. Functions can be used in formulae; transforms can be used in transforms.

dbDateTimeFromLong (transform)- takes a long input (offset from Unix epoch) and returns aDBDateTime.The attributetransformPrecisionshould be set to "millis", "micros", or "nanos" to indicate the units of the long offset value. For example,transformPrecision="millis"means that the long value from the source is the number of milliseconds from Unix epoch. RequiressourceType=longto match the expected input of the transform.new DBDateTime (function)- this is the constructor forDBDateTimeobjects. By default it takes a long offset of nanoseconds from Unix epoch. Additionally,DBDateTimeUtils.millsToNanos()andDBDateTimeUtils.microsToNanos()are available for converting other precisions of offsets to nanoseconds. Standard Java date and time parsing and conversion functions can be used to create a long Unix epoch offset from other formats; the result of which can then be passed to this function to store asDBDateTimevalue. java.time.* and java.time.format.DateTimeFormatterare included in the standard imports.ZonedDateTime.parsecan be used with various formats to parse strings intoZonedDateTimes; and theZonedDateTime.toInstant().toEpochMilli()call can then be used, for example, to convert to long milliseconds to pass to this creator. 'dbDateTimeFromTimestamp (function)- takes a Java Timestamp and returns aDBDateTimeused internally, automatically, when importing JDBC datetime types toDBDateTimedestinationcolumns.DBTimeUtils.convertDateTime (function)- takes a String and returns aDBDateTime. Expects a string of the form: YYYY-MM-DDThh:mm:ss.nnnnnnnnn TZ. One use of this function would be to convert dates that had originally been exported to CSV from a Deephaven system.enumFormatter (transform)- takes an int input and returns a String, based on lookup from an enum defined in the system columns file. The attributetransformColumnSetis the String column set name to match in the columns file. The attributetransformEnumis the String name of the enum under the column set to match in the columns file. RequiressourceType=inttomatch the expected input of the transform.accountingInt (function)- takes a String and converts to an int. Converts enclosing parentheses to a negative value. Removes thousands separators. Expects US number formatting (comma for thousands separator). RequiressourceType=Stringto match the expected input of the function.accountingLong (function)- takes a String and converts it to a long. Converts enclosing parentheses to a negative value. Removes thousands separators. Expects US number formatting (comma for thousands separator). RequiressourceType=Stringto match the expected input of the function.accountingDouble (function)- takes a String and converts it to a double. Converts enclosing parentheses to a negative value. Removes thousands separators. Expects US number formatting (comma for thousands separator). RequiressourceType=Stringto match the expected input of the function.stripFirstCharacter (function)- takes a String and returns a String. Strips the first character, assuming the String has at least one character. No overrides of sourceType needed because input and output types match.parseBoolean (function)- takes a String and returns a Boolean. Single character T/F 1/0 t/f converted to Boolean true or false. Strings (case-insensitive) true or false converted to Boolean true or false. Used internally, automatically, when reading a CSV to a destination column that is Boolean. Requires asourceType=Stringfor explicit use in custom formulae.getLongArray (function)- takes a String and returns an array of long values. Two arguments - first is the String of array values, second is a String delimiter to parse the values. Input string is expected to be enclosed - normally by square brackets. Only single-dimensional lists (arrays) are supported; no matrices. Used internally, automatically, when importing to along[]column. RequiressourceType=Stringfor explicit use in custom formulae.getDoubleArray (function)- takes a String and returns an array of double values. Two arguments: first is the String of array values, second is a String delimiter to parse the values. Input string is expected to be enclosed normally by square brackets. Only single-dimensional lists (arrays) are supported; no matrices. Used internally, automatically, when importing to adouble[]column. RequiressourceType=Stringfor explicit use in custom formulae.

Scripting Imports and Merges

The most flexible method for importing data into Deephaven is via Groovy/Python scripting. Import scripts may be deployed via the command line or through a RunAndDone persistent query. Import tasks are most easily executed from a script by using a "builder" class. The underlying import logic is identical to that accessible from the command line tools and persistent queries.

Command Line Execution

To run a script from the command line, use the iris_exec program as follows:

/usr/illumon/latest/bin/iris_exec run_local_script -- -s ./script.groovy -h <merge server host> -p <merge server port>

where ./script.groovy is a local script file to execute on the server.

See Running Local Scripts for more details on options.

Important Notes

- When importing CSV files, the source file(s) paths are local to the server and must be readable by the user that runs the query workers (typically dbquery/dbmerge depending on which server you use).

- Importing and merging data requires the appropriate write permissions. This means these scripts should be run against a "merge server", not a "query server". Since the latter is the default in the local script runner, you must specify an alternate host and/or port to reference a merge server. See the

-queryHostand-queryPortoptions torun_local_script.

Examples

The following examples show how to execute an import from a Groovy script. Note: there are many options not illustrated in these examples. Please refer to Import API Reference for all the variations available.

CSV Import Script

This script imports a single CSV file to a specified partition.

import com.illumon.iris.importers.util.CsvImport

import com.illumon.iris.importers.ImportOutputMode

rows = new CsvImport.Builder("Test","Sample")

.setSourceFile("/db/TempFiles/dbquery/staging/data1.csv")

.setDestinationPartitions("localhost/2018-04-01")

.setOutputMode(ImportOutputMode.REPLACE)

.build()

.run()

println "Imported " + rows + " rows."

JDBC Import Script

The following script imports all the records from a SQL Server table into a single partition:

import com.illumon.iris.importers.util.JdbcImport

import com.illumon.iris.importers.ImportOutputMode

rows=new JdbcImport.Builder("Test","Table1")

.setDriver("com.microsoft.sqlserver.jdbc.SQLServerDriver")

.setConnectionUrl("jdbc:sqlserver://myserverhost;database=testdb;user=myuser;password=mypassword")

.setQuery("SELECT * FROM table1")

.setDestinationPartitions("localhost/2018-05-01")

.setStrict(false)

.setOutputMode(ImportOutputMode.SAFE)

.build()

.run()

println "Imported " + rows + " rows."

While the following script imports all records into partitions based on the "Date" column, with the user and password values passed in via the iris_exec command line:

import com.illumon.iris.importers.util.JdbcImport

import com.illumon.iris.importers.ImportOutputMode

rows=new JdbcImport.builder("Test","Table1")

.setDriver("com.microsoft.sqlserver.jdbc.SQLServerDriver")

.setConnectionUrl("jdbc:sqlserver://myserverhost;database=testdb")

.setUser(user)

.setPassword(password)

.setSourceTimeZone("UTC")

.setQuery("SELECT * FROM table1 ORDER BY date")

.setDestinationPartitions("localhost")

.setPartitionColumn("Date")

.build()

.run()

println "Imported " + rows + " rows."

Merge Data Script

The following example assumes the partition is set via a command line argument, and might be executed from the command line as:

import com.illumon.iris.importers.util.MergeData

import com.illumon.iris.importers.ImportOutputMode

new MergeData.builder(db,"Test","MyTable")

.setPartitionColumnValue(partition)

.build()

.run()

println "Done merging!"

The following script might be executed from the command line for the 2018-05-01 partition: (Note: The port is specified to connect to the appropriate Deephaven server for merge operations.)

iris_exec run_local_script -- -s ~/myscripts/merge_single.groovy -p 30002 partition "2018-05-01"

Import API Reference

Each type of import (CSV/XML/JDBC/JSON) has a corresponding class. Each import class contains one or more static builder methods, which produce an object used to set parameters for the import. The builder returns an import object from the build() method. Imports are executed via the run() method and if successful, return the number of rows imported. All other parameters and options for the import are configured via the setter methods described below. The general pattern when executing an import is:

nRows = (Csv|Xml|Jdbc|Json)Import.builder(<namespace>,<table>)

.set<option>(<option value>)

...

.build()

.run()

Common Parameters

The following parameters are common to all import builders:

|

Setter Method |

Type |

Req? |

Default |

Description |

|---|---|---|---|---|

|

setDestinationPartitions |

String |

No* |

N/A |

The destination partition(s). e.g., " |

|

setDestinationDirectory |

File | String |

No* |

N/A |

The destination directory. e.g., |

|

setOutputMode |

ImportOutputMode | String |

No |

SAFE |

Enumeration with the following options: May also be specified as String (e.g., |

|

setStrict |

boolean |

No |

true |

If |

|

setPartitionColumn |

String |

No |

N/A |

Column name to use to choose which partition to import each source row. |

|

setSourceName |

String |

No |

N/A |

Specific |

* Either a destination directory, specific partition, or internal partition plus a partition column must be provided. A directory can be used to write a new set of table files to specific location on disk, where they can later be read with TableTools. A destination partition is used to write to intraday locations for existing tables. The internal partition value is used to separate data on disk; it does not need to be unique for a table. The name of the import server is a common value for this. The partitioning value is a string data value used to populate the partitioning column in the table during the import. This value must be unique within the table. In summary, there are three ways to specify destination table partition(s):

- Destination directory (e.g., .

setDestinationDirectory( /db/Intraday/<namespace>/<table>/localhost/<date>/<table/)) - Internal partition and destination partition (e.g.,

.setDestinationPartitions("localhost/2018-04-01")) - Internal partition and partition column - for multi-partition import (e.g.,

.setDestinationPartitions("localhost").setPartitionColumn("Date"))

CSV Import Builder

The CsvImport.builder method creates a CsvImportBuilder. The options are configured using the setter methods below.

CsvImport.Builder options

|

Setter Method |

Type |

Req? |

Default |

Description |

|---|---|---|---|---|

|

|

String |

No* |

N/A |

Directory from which to read source file(s) |

|

setSourceFile |

String |

No* |

N/A |

Source file name (either full path on server file system or relative to specified source directory) |

|

setSourceGlob |

String |

No* |

N/A |

Source file(s) wildcard expression |

|

setFileFormat |

String |

No |

DEFAULT |

The Apache commons CSV parser is used to parse the file itself. Five common formats are supported:

|

|

setDelimiter |

char |

No |

, |

Allows specification of a character other than the file format default as the field delimiter. If delimiter is specified, |

|

setSkipLines |

int |

No |

0 |

Number of lines to skip before beginning parse (before header line, if any) |

|

setTrim |

boolean |

No |

false |

Whether to trim whitespace from field values |

|

setConstantColumnValue

|

String |

No |

N/A |

A String to materialize as the source column when an

|

* The sourceDirectory parameter will be used in conjunction with sourceFile or sourceGlob. If sourceDirectory is not provided, but sourceFile is, then sourceFile will be used as a fully qualified file name. If sourceDirectory is not provided, but sourceGlob is, then sourceDirectory will default to the configured log file directory from the prop file being used.

XML Import

The XmlImport.builder method create an XmlImportBuilder. The options are configured using the setter methods below.

XmlImport.Builder options

|

Option Setter |

Type |

Req? |

Default |

Description |

|---|---|---|---|---|

|

setSourceDirectory |

String |

No* |

N/A |

Directory from which to read source file(s) |

|

setSourceFile |

String |

No* |

N/A |

Source file name (either full path on server file system or relative to specified source directory) |

|

setSourceGlob |

String |

No* |

N/A |

Source file(s) wildcard expression |

|

setDelimiter |

char |

No |

, |

Allows specification of a character to be used when parsing string representations of long or double arrays. |

|

setElementType |

String |

Yes |

N/A |

The name or path of the element that will contain data elements. This will be the name of the element which holds your data. |

|

setStartIndex |

int |

No |

0 |

Starting from the root of the document, the index (1 being the first top-level element in the document after the root) of the element under which data can be found. |

|

setStartDepth |

int |

No |

1 |

Under the element indicated by Start Index, how many levels of first children to traverse to find an element that contains data to import. |

|

setMaxDepth |

int |

No |

1 |

Starting from Start Depth, how many levels of element paths to traverse and concatenate to provide a list that can be selected under Element Name. |

|

setUseAttributeValues |

boolean |

No |

false |

Indicates that field values will be taken from attribute valued. E.g., |

|

setUseElementValues |

boolean |

No |

true |

Indicates that field values will be taken from element values. E.g., |

|

setPositionValues |

boolean |

No |

false |

When false, field values within the document will be named. E.g., a value called |

|

setConstantColumnValue |

String |

No |

N/A |

A String to materialize as the source column when an |

* The sourceDirectory parameter will be used in conjunction with sourceFile or sourceGlob. If sourceDirectory is not provided, but sourceFile is, then sourceFile will be used as a fully qualified file name. If sourceDirectory is not provided, but sourceGlob is, then sourceDirectory will default to the configured log file directory from the prop file being used.

JDBC Import

The JdbcImport.builder method creates a JdbcImportBuilder. The options are configured using the setter methods below.

JdbcImport.Builder options

|

Option Setter |

Type |

Req? |

Default |

Description |

|---|---|---|---|---|

|

setDriver |

String |

Yes |

N/A |

JDBC driver class. For example,

|

|

setConnectionUrl |

String |

Yes |

N/A |

JDBC connection string |

|

setUser |

String |

No |

N/A |

JDBC user (if not specified in connection string) |

|

setPassword |

String |

No |

N/A |

JDBC password (if not specified in connection string) |

|

setQuery |

String |

Yes |

N/A |

SQL query to execute for import |

|

setSourceTimeZone |

TimeZone |

No |

Server TZ* |

Time zone to be used when interpreting time & date values that do not have explicit offsets. |

* Unless the source time zone is specified, the JDBC import task attempts to read the server time zone/UTC offset prior to executing the query. The source time zone is used to interpret SQL "datetime", "date", and "time-of-day" values, unless those types explicitly contain an offset. Since Deephaven presently has no "date" type, "date" values are interpreted as a datetime at midnight in the server time zone.

JSON Import

JSON is imported using a recursive flattening of each JSON object (one top level JSON object per row). The input file should contain plain JSON objects, not comma separated or an array. For example, the following is a legal file with three objects, which will result in three rows:

{a:1} {a:2} {a:3}

You may have multiple JSON objects on a single line, and/or the JSON objects may span multiple lines.

Nested objects are recursively expanded, with the column name for each field derived from the full "path" to that field. The path separator is specified as a builder parameter. Array elements are numbered with a zero-based index. For example, the following maps to a schema with the columns "a", "b_c", "b_d", "e0", "e1", "e2" and a row with values 1, 2, 3, 4, 5, 6 (the path separator used here is "_"):

{ "a":1, "b":{ "c":2, "d":3 }, "e":[4,5,6] }

The JsonImport.builder method builds a JsonImportBuilder. The options are configured using the setter methods below.

JsonImportBuilder options

|

Option Setter |

Type |

Req? |

Default |

Description |

|---|---|---|---|---|

|

setSourceDirectory |

String |

No* |

N/A |

Directory from which to read source file(s) |

|

setSourceFile |

String |

No* |

N/A |

Source file name (either full path on server file system or relative to specified source directory) |

|

setSourceGlob |

String |

No* |

N/A |

Source file(s) wildcard expression |

|

setMaxInferItems |

Long |

No |

No Limit |

Maximum number of JSON objects to examine when inferring which columns are present in the file. Inference is necessary because JSON has no internal "schema". |

|

setColumnNames |

String | List<String> |

No |

N/A |

Column names to extract from source data. |

|

setFieldPathSeparator |

TimeZone |

No** |

N/A |

String to use when generating column names from nested JSON data. |

| setConstantColumnValue | String | No | N/A | A String to materialize as the source column when an ImportColumn is defined with a sourceType of CONSTANT |

* Unless the source time zone is specified, the JDBC import task attempts to read the server time zone/UTC offset prior to executing the query. The source time zone is used to interpret SQL "datetime", "date", and "time-of-day" values, unless those types explicitly contain an offset. Since Deephaven presently has no "date" type, "date" values are interpreted as a datetime at midnight in the server time zone.

** If nested JSON is present in the source file, you must specify a field path separator.

Merge API Reference

The Merge API is fairly simple, and analogous to the Import API. There is a single MergeData class with a builder method that returns a MergeDataBuilder object. MergeDataBuilder will produce a MergeData object when build() is called. Note that this MergeData class is in the com.illumon.iris.importers.util package (there is another MergeData class in the com.illumon.iris.importers package that is not intended for use from a script). Then the merge is executed via calling the run() method on the MergeData object. is used to build the merge. The general pattern for performing a merge is:

MergeData.builder(db,<namespace>,<table>)

.set<param>(<param value>)

...

.build()

.run()

MergeDataBuilder options

|

Option Setter |

Type |

Req? |

Default |

Description |

|---|---|---|---|---|

|

setPartitionColumnValue

|

String |

No* |

N/A |

A literal string used to select the column partition to merge. Often a date, e.g.. " |

|

setPartitionColumnFormula

|

String |

No* |

N/A |

An expression that will be evaluated to specify the partition to merge. For example |

|

setThreadPoolSize

|

int |

No |

4 |